Machine learning is increasingly empowering surgical robotics. For example, innovations like Medtronic’s Hugo integrate AI-driven video analytics such as Touch Surgery. These tools can segment procedural steps in real time, provide actionable insights to surgeons, and, when trained on diverse, representative clinical data, help avoid bias in critical decision making.¹ ² ³ However, not all systems fully exploit video context. Unless designed for temporal modeling or memory, AI may still process each frame largely in isolation, potentially missing procedure-wide continuity.⁴ ⁵

The Glitch in the System

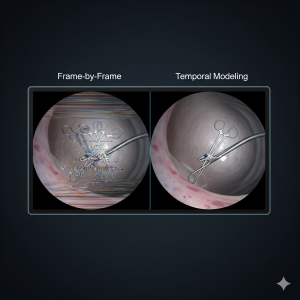

Here’s a way to visualize it: a surgeon is working inside a patient, and the ML system is making a prediction for every single frame of the video. It’s like flipping through a flipbook, page by page. This might work fine on a good day, but surgery is anything but static, and it is sometimes unpredictable. Tissue shifts, a bit of blood can obscure the view, and instruments cross the screen in a fraction of a second. And as recent work in medical imaging shows, such glitches can be amplified when training data underrepresent differences in anatomy or skin tone, leading to preventable misclassifications.¹⁵

When the AI doesn’t have a sense of time, it creates a handful of frustrating and risky problems. For instance:

- What happens if a crucial nerve or vessel is identified correctly in one frame, only to vanish in the next and reappear two seconds later? The AI is making independent predictions for each frame, which can lead to inconsistencies across time.⁵

- Imagine a fast-moving tool momentarily disappearing between frames. This isn’t just a nuisance; it creates a blind spot that could compromise patient safety and equity of outcomes, particularly for underrepresented groups if models are not trained on diverse data.⁶ ¹³

In many current surgical AI systems, each prediction is based primarily on single-frame analysis. Unless explicitly designed with memory or temporal modeling, the AI may struggle to carry context across time, which can limit its ability to fully understand the flow of a procedure.⁵
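To make the flicker problem concrete, here is a minimal Python sketch. It assumes a hypothetical detector that emits one confidence score per frame for a single structure (say, a vessel) and thresholds each frame on its own. The scores, the 0.5 threshold, and the function names are illustrative assumptions, not taken from any real surgical system.

```python
from typing import List


def per_frame_decisions(scores: List[float], threshold: float = 0.5) -> List[bool]:
    """Threshold each frame's score independently, with no memory of earlier frames."""
    return [s >= threshold for s in scores]


def count_flickers(decisions: List[bool]) -> int:
    """Count how many times the overlay toggles between visible and hidden."""
    return sum(1 for prev, cur in zip(decisions, decisions[1:]) if prev != cur)


# Hypothetical scores for a vessel that is actually present in every frame;
# the dips mimic brief occlusion by blood or a passing instrument.
scores = [0.9, 0.8, 0.45, 0.85, 0.4, 0.3, 0.9, 0.95]

decisions = per_frame_decisions(scores)
flickers = count_flickers(decisions)
print(decisions, flickers)
```

In this toy sequence the overlay switches state four times in eight frames even though the structure never left the field of view, which is the kind of inconsistency a surgeon would experience as flicker.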

The cumulative effect is a heavy cognitive load on the surgeon. Instead of a steady, reliable partner, the surgeon gets a distracting, inconsistent overlay. In a high-stakes environment, even a brief flicker can break their concentration and erode their trust in the technology.⁶

The Hidden Costs of Unreliability

A systematic review pooling data from over 3.3 million da Vinci-assisted surgeries up to late 2024 found a 1% overall malfunction rate, with 0.09% leading to surgical conversion and 0.01% (roughly 330 cases) resulting in patient injury. This points to high reliability, with most malfunctions being minor technical hiccups.⁷ Although large-scale studies don’t capture every type of transient issue, anecdotal reports suggest intermittent glitches may be underreported.⁸
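As a quick sanity check on the figures above, the percentages can be converted back into approximate case counts. This is only back-of-the-envelope arithmetic: it assumes exactly 3.3 million procedures (the review reports "over 3.3 million") and that every rate is expressed per procedure, which the 330 figure suggests but the text does not state outright.

```python
# Assumed denominator: the review pools "over 3.3 million" procedures,
# so these counts are lower-bound approximations.
procedures = 3_300_000

# Rates as quoted in the text, converted from percentages to fractions.
rates = {
    "malfunction": 0.01,    # 1%
    "conversion": 0.0009,   # 0.09%
    "injury": 0.0001,       # 0.01%
}

# Round to whole cases to absorb floating-point noise.
counts = {event: round(procedures * rate) for event, rate in rates.items()}

for event, n in counts.items():
    print(f"{event}: ~{n:,} cases")
```

Under these assumptions the injury count works out to about 330, matching the number quoted in the review summary.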

This reveals a critical need for change. If we want surgeons and patients to fully trust and rely on these systems, the technology has to work flawlessly, not just in theory but in the fast-paced, unpredictable reality of the operating room, and it has to be trained on representative data to ensure equity in outcomes.¹⁴

Giving the AI a Memory

A potential solution is to give the AI a memory. Temporal modeling architectures treat surgical video as a sequence, not a stack of photos. Instead of processing one frame at a time, these models learn how each moment connects to the next, building a richer, more stable understanding of the scene.⁵
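The simplest possible form of "memory" can be sketched in a few lines of Python. The example below is a hand-written stand-in, not a learned temporal model: it assumes a hypothetical detector that emits one confidence score per frame, carries a running state across frames with an exponential moving average, and uses two thresholds (hysteresis) so the overlay only switches state on sustained evidence. The scores, `alpha`, and both thresholds are illustrative assumptions.

```python
from typing import List


def smooth_scores(scores: List[float], alpha: float = 0.5) -> List[float]:
    """Exponential moving average: blend each new score with the running state."""
    smoothed = []
    state = scores[0] if scores else 0.0
    for s in scores:
        state = alpha * s + (1 - alpha) * state
        smoothed.append(state)
    return smoothed


def hysteresis_decisions(scores: List[float], on: float = 0.6, off: float = 0.4) -> List[bool]:
    """Show the overlay at or above `on`, hide it at or below `off`, else keep the last state."""
    visible = False
    decisions = []
    for s in scores:
        if s >= on:
            visible = True
        elif s <= off:
            visible = False
        decisions.append(visible)
    return decisions


# Hypothetical scores for a structure that is present throughout,
# with brief dips caused by occlusion.
scores = [0.9, 0.8, 0.45, 0.85, 0.4, 0.3, 0.9, 0.95]

smoothed = smooth_scores(scores)
decisions = hysteresis_decisions(smoothed)
print(decisions)
```

With these toy numbers the overlay stays on for all eight frames despite the dips. The trade-off is lag: a structure that genuinely leaves the view would stay highlighted for a few frames, which is one reason learned temporal models are attractive over fixed smoothing rules.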

Researchers are pursuing several approaches:

- Recurrent models (LSTMs/GRUs) provide short-term memory, helping the AI ‘remember’ what happened seconds earlier.⁵

- SlowFast Networks can capture both rapid tool movements and the slower context of the procedure.⁹

- Transformers are now the leading approach, capable of modeling long-range dependencies and understanding the entire surgical flow.¹⁰ ¹¹
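To give a feel for how a transformer lets every frame draw on every other frame, here is a stripped-down sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the per-frame feature vectors are made up, and the learned query/key/value projections, multiple heads, and positional encodings that real models use are omitted (identity projections are assumed instead).

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(frames: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with identity projections.

    Each output vector is a weighted average of all frame vectors, with
    weights derived from the similarity between frames.
    """
    d = len(frames[0])
    outputs = []
    for query in frames:
        weights = softmax([dot(query, key) / math.sqrt(d) for key in frames])
        outputs.append([sum(w * v[i] for w, v in zip(weights, frames)) for i in range(d)])
    return outputs


# Hypothetical 2-D frame features: three consistent frames and one outlier
# (index 2), e.g. a frame where the view was briefly obscured.
frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
contextualized = self_attention(frames)
print(contextualized[2])
```

After attention, the outlier frame's representation is pulled toward its neighbors because it mixes in information from the whole sequence. Trained models learn which frames to attend to rather than relying on raw similarity as this sketch does.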

For surgeons, this means steadier overlays, fewer distracting flickers, and more reliable confidence signals. Beyond visualization, temporal models also enable real-time phase recognition and complication detection.¹⁰ ¹¹ For patients, the benefits are safer procedures and greater confidence that robotic assistance is working for them and not against them. The payoff is clear: lower cognitive load on the surgeon and a stronger foundation of trust between human and machine.

The Future of Surgery is Now

The global surgical robotics market is projected to expand from over $8 billion today to more than $20 billion by 2030, driven by rapid innovation and investment.¹² Yet sustained adoption depends heavily on surgeon and patient trust. Technical fragility, such as inconsistent visual overlays or transient tool disappearance, can erode confidence and undermine safety. Temporal modeling is not merely an incremental improvement but a foundational requirement for clinical reliability. To realize the full promise of surgical robotics, future systems must move beyond frame-by-frame perception and embrace models that capture the procedure as a continuous, coherent sequence and that are developed with population-diverse training data to ensure equitable patient safety and unbiased reliability.³

Call to Action

Engineers have a critical role in shaping the next generation of surgical AI. To build the temporal modeling skills needed in this space, they can look to accessible training like the NVIDIA Deep Learning Institute (DLI) for video analytics or the free Stanford CS224n course, which introduce the foundations of computer vision and sequence modeling. Beyond coursework, real-world experience is available through competitions such as the MICCAI EndoVis Challenge, where researchers and engineers test temporal models on surgical video benchmarks.

But technical skill alone is not enough. To ensure equity, engineers can engage with organizations that promote inclusivity in AI, including Black in AI, LatinX in AI, and Women in Machine Learning, each of which provides mentorship, workshops, and collaboration opportunities. Similarly, initiatives like STANDING Together focus directly on creating representative health datasets and setting standards for fairness in clinical AI.

By combining structured learning, hands-on challenges, and inclusive community building, engineers can drive surgical robotics toward systems that are not only more technically reliable, but also safer and fairer for all patients.

Works Cited

1. Medtronic. “Medtronic Hugo™ Robotic-Assisted Surgery (RAS) System.” Medtronic Newsroom, 2023, https://news.medtronic.com.

2. Touch Surgery. Touch Surgery Enterprise. Digital Surgery, 2023, https://www.digital.surgery.

3. van Genderen, Matthijs, et al. “The Imperative of Diversity and Equity for the Adoption of Responsible AI.” Frontiers in Artificial Intelligence, vol. 4, 2025. https://www.frontiersin.org/journals/artificial-

4. Twinanda, Andru Putra, et al. “EndoNet: A Deep Architecture for Recognition Tasks on Laparoscopic Videos.” IEEE Transactions on Medical Imaging, vol. 36, no. 1, 2017, pp. 86-97.

5. Jin, Yueming, et al. “Temporal Models for Surgical Scene Understanding: Phase Recognition and Tool Tracking.” International Journal of Computer Assisted Radiology and Surgery, vol. 18, 2023, pp. 729-742.

6. Topol, Eric. “High-performance Medicine: The Convergence of Human and Artificial Intelligence.” Nature Medicine, vol. 25, 2019, pp. 44-56.

7. Zhang, Tao, et al. “Malfunctions in Robotic-assisted Surgery: A Systematic Review and Pooled Analysis of Over 3.3 Million Procedures.” World Journal of Urology, 2025. https://link.springer.com/content/pdf/10.1007/s00345-025-05732-z.pdf.

8. Arora, Sonal, et al. “Surgical Errors and Adverse Events with Robotic Surgery: A Systematic Review and Meta-analysis.” BMJ Open, vol. 10, no. 6, 2020, e037260.

9. Wang, Christoph, et al. “SF-TMN: SlowFast Temporal Modeling Network for Surgical Phase Recognition.” International Journal of Computer Assisted Radiology and Surgery, vol. 19, 2024, pp. 113-127.

10. Chaudhry, Muhammad Atif, et al. “MS-AST: Multi-Scale Action Segmentation Transformer for Surgical Phase Recognition.” arXiv preprint, 2023, https://arxiv.org/html/2306.08859.

11. Li, Yuyuan, et al. “LoViT: Long Video Transformer for Surgical Workflow Analysis.” arXiv preprint, 2023, https://arxiv.org/abs/2305.08989.

12. Fortune Business Insights. Surgical Robots Market Size, Share & Growth Report, 2030. Fortune Business Insights, 2024. https://www.fortunebusinessinsights.com/industry-reports/surgical-robots-market-100948.

13. Ahmad, Waqas, et al. “Equity and Artificial Intelligence in Surgical Care: A Comprehensive Review of Current Challenges and Promising Solutions.” Journal of Clinical Surgery Research, 2023. https://www.neliti.com/publications/592186/equity-and-artificial-intelligence-in-surgical-care-a-comprehensive-review-of-cu.

14. Ratwani, Raj M., et al. “Patient Safety and Artificial Intelligence in Clinical Care.” JAMA Health Forum, vol. 5, no. 3, 2024, e240160. https://jamanetwork.com/journals/jama-health-forum/fullarticle/2815239.

15. López-Pérez, Alejandro, Søren Hauberg, and Aasa Feragen. “Are Generative Models Fair? A Study of Racial Bias in Dermatological Image Generation.” arXiv preprint, 2025. https://arxiv.org/abs/2501.11752.
